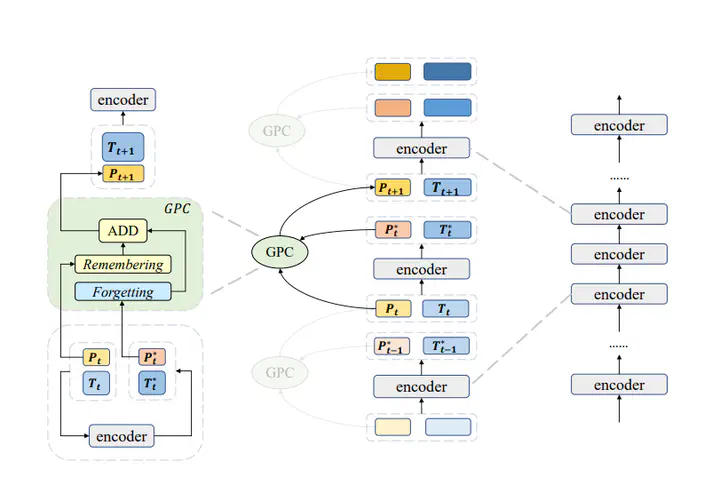

Global Prompt Cell Model (best viewed in color)

Global Prompt Cell Model (best viewed in color)Abstract

As a novel approach to tuning pre-trained models, prompt tuning involves freezing the parameters in downstream tasks while inserting trainable embeddings into inputs in the first layer. However, previous methods have mainly focused on the initialization of prompt embeddings. The strategy of training and utilizing prompt embeddings in a reasonable way has become a limiting factor in the effectiveness of prompt tuning. To address this issue, we introduce the Global Prompt Cell (GPC), a portable control module for prompt tuning that selectively preserves prompt information across all encoder layers. Our experimental results demonstrate a 5.8% improvement on SuperGLUE datasets compared to vanilla prompt tuning.

Type

Publication

In The 12th CCF International Conference on Natural Language Processing and Chinese Computing